
Context: Addressing the B20 Summit India in New Delhi, the Indian Prime Minister called for a global framework to ensure the ethical use of artificial intelligence.

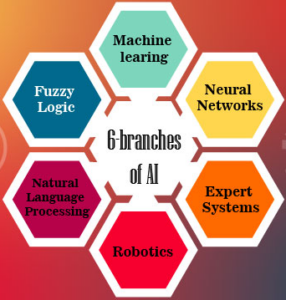

What is Artificial Intelligence?

- AI is the branch of computer science concerned with developing machines that can complete tasks that typically require human intelligence.

- The goals of artificial intelligence include computer-enhanced learning, reasoning, and perception.

- Artificial intelligence is based on the principle that human intelligence can be defined precisely enough for a machine to mimic it and execute tasks, from the simplest to the most complex.


Some Applications of AI

- Healthcare: Companies are applying machine learning to make better and faster medical diagnoses than humans.

- Other AI applications include using online virtual health assistants and chatbots to help patients and healthcare customers find medical information, schedule appointments etc.

- Business: Machine learning algorithms are being integrated into analytics and customer relationship management (CRM) platforms to uncover information on how to better serve customers.

- Education: In classrooms and training centres, AI-powered adaptive learning tailors educational content to each student’s needs, while plagiarism detection ensures academic integrity.

- Agriculture: Farmers and scientists are using AI to monitor crops, predict yields and check pests.

- AI-enabled precision farming helps farmers make data-driven decisions so they can optimize irrigation, improve fertilization and reduce waste.

- Security: Law enforcement agencies and cybersecurity firms can use AI for facial recognition, surveillance and threat detection. These technologies enhance public safety and combat cybercrime by identifying and neutralizing potential threats in real-time.

- Space Exploration: Scientists are already using AI for spacecraft navigation, satellite imaging, mission planning and identifying new astronomical phenomena.

Various Concerns Associated with AI

- Unpredictable nature: Artificial General Intelligence (AGI), if achieved, could self-learn and go beyond human intelligence, raising concerns about predictability and security.

- Errors in outcome: AI tools have already caused problems such as mistaken arrests due to Facial Recognition Software, and unfair treatment due to biases built into AI systems.

- Inaccurate content: Chatbots built on large language models such as GPT-3 and GPT-4 can generate content that is inaccurate or that uses copyrighted material without permission.

- Emergence of deepfakes: Easy-to-use AI tools can now generate realistic-looking synthetic media known as deepfakes.

- For instance, deepfake videos of many celebrities raise concern over AI’s frightening power and creative possibilities.

- Lack Of Transparency: Numerous AI models function opaquely, obscuring the rationale behind their decisions.

- For instance, a healthcare AI system may suggest a particular treatment option without being able to articulate the basis for its recommendation.

- Misuse: AI systems can be purposefully programmed to cause death or destruction, either by the users themselves or through an attack on the system by an adversary.

- For instance, adversarial attacks can manipulate AI models, posing risks in critical domains such as autonomous vehicles or healthcare.

- Cyber security concerns: AI could potentially be hacked, enabling bad actors to interfere with energy, transportation, early warning or other crucial systems.

- For instance, AI can be used to automate and improve cyberattacks, making them harder to detect and defend against.

- Associated emissions: Training a single AI system can emit over 250,000 pounds of carbon dioxide.

- Job Losses: AI is driving more automation, which could eliminate jobs in almost every field.

- According to the World Economic Forum (WEF), AI could displace 85 million jobs globally by 2025.

- Ethical issues: Currently, AI-powered weapons and vehicles operate under some form of human control. This may change in future if decisions are made entirely by machines.

- For instance, an AI loan approval system trained on data skewed towards wealthier demographics might deny loans to qualified applicants from disadvantaged communities.
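The loan approval example above can be made concrete with a small check. The sketch below is illustrative only: the group labels, the decision data and the 0.8 review threshold are hypothetical assumptions, not figures from any real system. It compares approval rates across two groups and reports the ratio of the lowest rate to the highest.

```python
from collections import defaultdict


def approval_rates(decisions):
    """Return the approval rate per group from (group, approved) pairs."""
    totals = defaultdict(int)
    approved = defaultdict(int)
    for group, ok in decisions:
        totals[group] += 1
        if ok:
            approved[group] += 1
    return {group: approved[group] / totals[group] for group in totals}


def approval_rate_ratio(rates):
    """Lowest approval rate divided by the highest; 1.0 means equal rates."""
    highest = max(rates.values())
    if highest == 0:
        return 1.0  # nobody was approved, so there is no gap between groups
    return min(rates.values()) / highest


# Hypothetical decisions made by an imagined loan model (assumed data).
decisions = (
    [("higher_income", True)] * 8 + [("higher_income", False)] * 2
    + [("lower_income", True)] * 4 + [("lower_income", False)] * 6
)

rates = approval_rates(decisions)
ratio = approval_rate_ratio(rates)

REVIEW_THRESHOLD = 0.8  # assumed cut-off chosen for this illustration
needs_review = ratio < REVIEW_THRESHOLD

print(rates)
print(f"ratio={ratio:.2f}, needs_review={needs_review}")
```

A low ratio does not prove discrimination on its own, since the groups may differ in legitimate ways, but it is the kind of signal that would prompt a closer look at the training data.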

Why is Global Regulation of AI a Challenge?

- Lack of legal definition: To regulate AI well, we must define AI and understand anticipated AI risks and benefits.

- Legally defining AI is important to identify what is subject to the law but AI technologies are still evolving, so it is hard to pin down a stable legal definition.

- Weighing risk-benefits: Understanding the risks and benefits of AI is also important. Good regulations should maximize public benefits while minimizing risks.

- However, AI applications are still emerging, so it is difficult to know or predict what future risks or benefits might be.

- Adaptability: Lawmakers are often too slow to adapt to the rapidly changing technological environment. Without new laws, regulators have to use old laws to address new problems.

Global Regulations for AI

| Country | Details |
| --- | --- |
| European Union | |
| United Kingdom | |
| United States | |
| China | |

AI Laws in India

- India does not have any specific law regarding the application of AI.

- The Ministry of Electronics and Information Technology (MeitY) is the regulatory body of AI in India.

- It has the responsibility for the development, implementation and management of AI laws and guidelines in India.

- Certain provisions of intellectual property law, along with Sections 43A and 72A of the Information Technology Act, 2000, imply that anyone who commits an offence using AI is liable under the IT Act, criminal law and other cyber laws.

- The Information Technology (Intermediary Guidelines and Digital Media Ethics Code) Rules, 2021 oblige social media platforms to exercise greater diligence regarding content on their platforms.

- In 2018, NITI Aayog released the National Strategy for Artificial Intelligence (NSAI), which considered setting up a panel comprising the Ministry of Corporate Affairs and the Department of Industrial Policy & Promotion to oversee regulation.

- NITI Aayog also worked towards the establishment of AIRAWAT (AI Research, Analytics and Knowledge Assimilation platform). AIRAWAT considers the requirements for better use of AI.

- In 2020, NITI Aayog drafted documents proposing an oversight body and the enforcement of responsible AI principles (safety and reliability, equality, inclusivity and non-discrimination, privacy and security, transparency, accountability, and protection and reinforcement of human values). The body would review these principles, develop legal and technical frameworks, create new AI techniques and tools, and represent India in global standard-setting.

Way Forward

- Regulation that enables AI to be used in ways that help society, while preventing its misuse, is the best way forward for this dual-use technology.

- In this regard, producer responsibility will be the best way to regulate AI. Companies must take active measures to prevent misuse of their product.

- Global AI needs global cooperation. Shared standards and regulations, like those discussed in the G7 Hiroshima AI Process (HAP), are crucial to ensure ethical development and consistent application across borders.

- Regular audits of AI systems must be conducted to ensure that they are aligned with ethical principles and values.

Over 70% of Indian companies have started using AI in their machinery to work effectively with a smaller workforce. With the use of AI increasing in all sectors, regulation is needed because a technology this powerful can pose risks to privacy and to humanity.

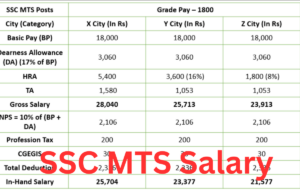
