
Context: The Supreme Court in August 2025 directed the Union government to draft guidelines for regulating social media, in consultation with the News Broadcasters and Digital Association (NBDA). The matter arose from derogatory remarks made in a stand-up comedy skit about persons with Spinal Muscular Atrophy (SMA), leading to FIRs in Maharashtra and Assam.

Need for Regulation of Commercial Speech on Digital Platforms

- Vulnerable groups targeted: Humour or satire may perpetuate stereotypes against persons with disabilities, women, or minorities.

- Amplified impact: Digital platforms magnify offensive content, increasing its social harm compared to private speech.

- Profit-driven speech: Monetisation incentivises sensationalism and provocative content, often at the cost of sensitivity.

- Public order concerns: Derogatory or divisive content can spark unrest, as seen in multiple FIRs across states.

- Accountability gap: Platforms host speech but are not always transparent in moderating or removing harmful content.

Existing Regulatory Framework

Constitutional Provisions

- Article 19(1)(a) guarantees freedom of speech and expression.

- Article 19(2) allows reasonable restrictions on specific grounds: sovereignty, public order, decency, morality, defamation, etc.

- Dignity is not an independent ground but is indirectly protected through defamation and decency clauses.

Statutory Provisions

- Bharatiya Nyaya Sanhita (BNS), 2023: Penalises defamation, hate speech, and incitement.

- IT Act, 2000:

  - Section 69A permits blocking of online content in the interest of sovereignty, public order, etc.

  - The Blocking Rules, 2009 lay down the procedure for such blocking, though the process remains opaque.

- Other laws: Criminal defamation, obscenity laws, and FIR mechanisms are available.

Judicial Precedents

- Sakal Papers v. Union of India (1962) – Freedom of circulation is part of free speech.

- Tata Press v. MTNL (1995) – Commercial advertisements protected as free speech.

- Subramanian Swamy v. Union of India (2016) – Criminal defamation upheld to protect dignity.

- Imran Pratapgarhi case (2025) – The Court held that free speech includes offensive or disturbing views.

Challenges in Regulation

- Vagueness of Standards: Grounds like “dignity” are undefined and subjective, leading to arbitrary censorship.

- Opacity in Enforcement: Takedowns under Section 69A IT Act often occur without notice to content creators, violating natural justice.

- Chilling Effect on Free Speech: Over-regulation discourages comedians, satirists, and artists, undermining creativity and critique.

- Judicial Overreach and Institutional Boundaries: A court directing the executive to frame rules risks blurring the separation of powers and lends such regulations added legitimacy.

- Polyvocal Judiciary: Divergent observations by different Benches create uncertainty; conflicting judgments reduce clarity in law.

- Exclusionary Consultation: Often, regulatory frameworks consult industry lobbies but exclude creators, civil society, and vulnerable groups directly impacted.

Way Forward

- Strengthen Existing Mechanisms: Improve transparency in IT Act takedowns – mandatory notice, opportunity to appeal, and publication of orders.

- Avoid Overreach: Keep restrictions within Article 19(2); resist adding vague grounds like “dignity” as standalone limits.

- Safeguard Creativity: Protect the space for satire, art, and comedy that challenge social norms, while addressing targeted abuse.

- Inclusive Consultation: Include creators, disability rights groups, civil society, and digital rights experts alongside broadcasters.

- Institutional Clarity: Regulation should be executive-led with parliamentary oversight, rather than framed under judicial mandate.

- Review and Oversight Mechanisms: Establish independent review boards to oversee takedowns, prevent abuse, and ensure proportionality.


Advanced Air Defence Radars: Types, Comp...

Advanced Air Defence Radars: Types, Comp...

Ion Chromatography, Working and Applicat...

Ion Chromatography, Working and Applicat...

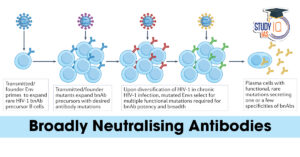

Broadly Neutralising Antibodies (bNAbs):...

Broadly Neutralising Antibodies (bNAbs):...